Optimisation Deep Dive – Especially for Mobile Devices

Mobile gaming is a key market alongside PC and console, but delivering great games means overcoming the unique performance challenges of handheld devices. Compared with PC or console hardware, mobile CPUs and GPUs are less powerful, memory bandwidth and capacity are more limited, and we have to contend with thermal throttling. Because we are developing Drone Force Command as a cross-platform game, optimisation has been essential.

Below we cover some of the key considerations and choices we have made so far during development to ensure our game is playable on mobile. Hopefully some of these will help with your own game if you are seeing low frame rates, device overheating or battery drain.

Draw Calls and Batching

One of the most common CPU bottlenecks in Unity rendering stems from having too many draw calls. Each draw call is an instruction from the CPU telling the GPU to draw a mesh (or part of one) with a specific material and shader state, and preparing and submitting each one costs CPU time. With too many draw calls, your CPU can’t feed the GPU fast enough, leading to low frame rates even if the GPU isn’t maxed out.

There are a few options to help reduce draw calls, but not all of them are suitable for mobile devices, so if you have a large number of draw calls, test these approaches and always profile your changes.

- Batching: Unity can combine multiple objects into fewer draw calls through batching:

  - Static Batching: For non-moving objects sharing the same material. Unity combines their geometry into large meshes at build time. This reduces draw calls significantly but increases memory usage and build times. Ideal for static level geometry.

  - Dynamic Batching: For small meshes (roughly under 300 vertices) sharing the same material. Unity combines them on the fly each frame. Dynamic batching has its own CPU overhead and strict limitations, making it less effective on mobile; it is often best left disabled unless profiling shows a benefit.

- GPU Instancing: A powerful technique, especially useful on mobile. It allows the GPU to render multiple copies of the same mesh with the same material in a single draw call. Per-instance differences (such as position, rotation and colour) are supplied via per-instance data buffers.

  - Ideal use cases: dense or repeated objects such as foliage, debris, crowds or, in our case, drones!

- SRP Batcher (URP/HDRP): If you are using the Universal Render Pipeline (URP) or High Definition Render Pipeline (HDRP), the SRP Batcher dramatically reduces the CPU cost of material setup. Rather than cutting the number of draw calls, it groups consecutive draw calls that use the same shader variant and keeps material data persistent on the GPU, so the setup cost between calls drops even when material properties differ. Ensure your materials use SRP Batcher-compatible shaders.
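To make the trade-offs above more concrete, the Python sketch below counts draw calls for a made-up scene under four deliberately simplified models: no batching, static batching (static objects collapse to one call per material), dynamic batching (meshes under the roughly 300-vertex limit collapse to one call per material) and GPU instancing (one call per mesh/material pair). The `Renderer` type and the scene are hypothetical, and the model ignores real constraints such as per-batch vertex caps, lighting and sorting, so treat it as intuition rather than a prediction of Unity’s behaviour.

```python
from dataclasses import dataclass

# Approximate vertex limit for Unity's dynamic batching (an assumption for this model).
DYNAMIC_BATCH_VERTEX_LIMIT = 300


@dataclass(frozen=True)
class Renderer:
    """Hypothetical stand-in for one rendered object."""
    mesh: str
    material: str
    vertex_count: int
    is_static: bool


def draw_calls_unbatched(renderers):
    # Every object is its own draw call.
    return len(renderers)


def draw_calls_static_batching(renderers):
    # Static objects merge into one call per material; moving objects stay separate.
    static_materials = {r.material for r in renderers if r.is_static}
    moving = sum(1 for r in renderers if not r.is_static)
    return len(static_materials) + moving


def draw_calls_dynamic_batching(renderers):
    # Small meshes merge into one call per material; large meshes stay separate.
    small_materials = {r.material for r in renderers
                       if r.vertex_count < DYNAMIC_BATCH_VERTEX_LIMIT}
    large = sum(1 for r in renderers if r.vertex_count >= DYNAMIC_BATCH_VERTEX_LIMIT)
    return len(small_materials) + large


def draw_calls_instanced(renderers):
    # Each distinct mesh/material pair becomes a single instanced draw call.
    return len({(r.mesh, r.material) for r in renderers})


scene = (
    [Renderer("rock", "stone", 120, True) for _ in range(50)]
    + [Renderer("wall", "stone", 2000, True) for _ in range(10)]
    + [Renderer("drone", "drone_mat", 800, False) for _ in range(1000)]
)

print(draw_calls_unbatched(scene))         # 1060
print(draw_calls_static_batching(scene))   # 1001
print(draw_calls_dynamic_batching(scene))  # 1011
print(draw_calls_instanced(scene))         # 3
```

In this toy scene, batching barely helps because the thousand moving drones are too large for dynamic batching, while instancing collapses them into a single call.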

Use the Frame Debugger and Profiler to identify draw call counts, and reduce draw calls where it makes sense and shows a real benefit on your target devices. For our game we have found GPU instancing to be invaluable, enabling us to render thousands of units/drones with only one draw call per unit type!
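GPU instancing relies on per-instance differences being stored in a buffer that the shader indexes by instance ID. As an illustration, this Python sketch packs hypothetical drone instances into one flat float32 array with a fixed stride, the kind of data you might upload to a `ComputeBuffer` or `GraphicsBuffer` in Unity. The 8-float layout (position, yaw, RGBA colour) and the helper names are assumptions for the example, not a Unity format.

```python
from array import array
from dataclasses import dataclass

# Assumed layout per instance: x, y, z, yaw, r, g, b, a (8 floats).
FLOATS_PER_INSTANCE = 8


@dataclass
class DroneInstance:
    position: tuple  # (x, y, z) in world space
    yaw: float       # rotation about the up axis, in radians
    colour: tuple    # (r, g, b, a), each 0..1


def pack_instances(instances):
    """Interleave every instance's data into one contiguous float32 buffer."""
    buf = array("f")
    for inst in instances:
        if len(inst.position) != 3 or len(inst.colour) != 4:
            raise ValueError("position needs 3 floats and colour needs 4")
        buf.extend(inst.position)
        buf.append(inst.yaw)
        buf.extend(inst.colour)
    return buf


def read_instance(buf, instance_id):
    """Mimic a shader fetching its own slice using the instance ID."""
    start = instance_id * FLOATS_PER_INSTANCE
    return buf[start:start + FLOATS_PER_INSTANCE]


drones = [DroneInstance((float(i), 0.0, 2.5), 0.5, (1.0, 0.25, 0.0, 1.0)) for i in range(1000)]
buffer = pack_instances(drones)
# 8000 floats; 32000 bytes assuming 4-byte C floats.
print(len(buffer), len(buffer) * buffer.itemsize)
print(list(read_instance(buffer, 3)))  # [3.0, 0.0, 2.5, 0.5, 1.0, 0.25, 0.0, 1.0]
```

One instanced draw can then cover all 1000 drones, with each instance reading only its own 8-float slice.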

Geometry – LOD & Imposters!

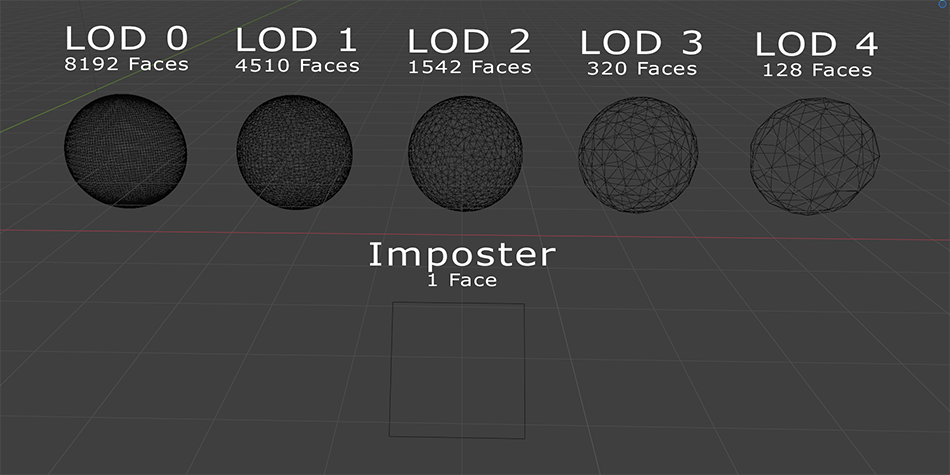

For complex objects, especially in the distance, rendering the full mesh every frame is wasteful. There are a few approaches that significantly simplify mesh geometry with barely noticeable visual change. The image above illustrates how Levels of Detail (LOD) and imposters affect geometry. Each LOD level keeps the same shaders and textures as the original mesh, whereas an imposter is simply a projected view of the mesh captured in advance.

- Level of Detail (LOD)

  - This technique mitigates high vertex counts by simplifying an object’s mesh as it moves further from the camera, reducing the vertex count and so lessening the GPU’s vertex shader workload and data transfer requirements.

  - While excessive vertex counts may be less critical than draw calls on modern mobile hardware, they still impact performance, especially for skinned meshes.

  - There are different ways to implement LOD; the simplified meshes can be generated automatically or authored manually.

- Imposters

  - Imposters are almost an extreme version of LOD: the geometry is simplified to a very basic 2D plane (or similar).

  - Instead of the object’s usual textures, they use pre-rendered views of the object from various angles, stored in a texture atlas. At runtime, the imposter selects the appropriate atlas image based on the camera’s view angle relative to the object.

  - This replaces many thousands of polygons and multiple draw calls (for complex objects) with a single quad or simple mesh, drastically reducing rendering cost.

  - Imposters require specific shaders, plus tools or scripts to generate the imposter textures/meshes. Several assets exist on the Unity Asset Store, or you can implement a custom solution as we have done.
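Unity’s LODGroup chooses a level based on how much of the screen height an object occupies rather than on raw distance. The Python sketch below is a simplified model of that idea: it estimates an object’s relative screen height from its world-space size, distance and vertical field of view, then picks the first LOD whose transition threshold is still met. The function names and threshold values are illustrative assumptions, and Unity’s own calculation also factors in settings such as LOD Bias.

```python
import math


def relative_screen_height(object_size, distance, vertical_fov_deg):
    """Approximate fraction of the screen height covered by an object of the given size."""
    if distance <= 0:
        return math.inf  # camera is at or inside the object: treat as full detail
    half_fov = math.radians(vertical_fov_deg) / 2
    return object_size / (2 * distance * math.tan(half_fov))


def select_lod(screen_height, thresholds):
    """Return the LOD index to draw, or None if the object should be culled.

    thresholds are descending screen-height fractions; LOD i is used while
    screen_height >= thresholds[i].
    """
    for level, threshold in enumerate(thresholds):
        if screen_height >= threshold:
            return level
    return None


thresholds = [0.5, 0.2, 0.05]  # LOD0 above 50% of screen height, LOD1 above 20%, LOD2 above 5%
for distance in (1, 5, 10, 30, 50):
    h = relative_screen_height(2.0, distance, 60.0)
    print(distance, select_lod(h, thresholds))
# 1 0
# 5 1
# 10 2
# 30 2
# 50 None
```

Working in screen-relative terms means the same thresholds behave sensibly when the camera zooms or the field of view changes.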

We have made significant use of both approaches. Our unit/drone objects use LOD, so their meshes are simplified the further out the camera zooms (this also combines with GPU instancing for a double benefit). Almost all other elements in our world space are rendered as imposters: thanks to our unique animated imposter system, we can play back pre-recorded complex particle effects with very little runtime performance hit.
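The animated imposter system described above is custom, so the following is only a generic Python sketch of how an imposter could pick its atlas cell. It assumes a hypothetical atlas laid out as a grid with one column per pre-rendered view around the vertical axis and one row per animation frame: the column comes from the camera’s direction around the object, and the row from elapsed time.

```python
import math


def view_index(object_pos, camera_pos, num_views):
    """Choose which of num_views evenly spaced yaw captures best matches the camera."""
    dx = camera_pos[0] - object_pos[0]
    dz = camera_pos[2] - object_pos[2]
    angle = math.atan2(dx, dz) % (2 * math.pi)  # 0 means the camera is on the object's +Z side
    step = 2 * math.pi / num_views
    return round(angle / step) % num_views  # modulo wraps angles near 360 degrees back to view 0


def animation_frame(time_seconds, fps, frame_count):
    """Looping frame index for an animated imposter recorded at fps."""
    return int(time_seconds * fps) % frame_count


def atlas_uv_rect(view, frame, num_views, frame_count):
    """(u, v, width, height) of the atlas cell: columns are views, rows are frames."""
    width = 1.0 / num_views
    height = 1.0 / frame_count
    return (view * width, frame * height, width, height)


# Hypothetical atlas: 8 views around the object, 48 recorded frames at 24 fps.
view = view_index((0.0, 0.0, 0.0), (10.0, 0.0, 0.0), 8)
frame = animation_frame(1.25, 24, 48)
print(view, frame)  # 2 30
print(atlas_uv_rect(view, frame, 8, 48))
```

A production system might also blend between neighbouring views or capture vertical angles, but the core step is mapping an angle and a time to a single atlas cell, which is far cheaper than simulating the original effect.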

Shaders

Once the CPU sends instructions, the GPU takes over. Mobile GPUs are powerful but will always be more limited than modern desktop cards, and a badly written shader can cripple performance unnecessarily. We prefer to write our shaders in code because it gives us more control, but these approaches also apply when using Shader Graph.

- Write Efficient Shaders

  - Fragment Shader: The fragment (pixel) shader is often the bottleneck, because it runs for every pixel a surface covers. Minimise complex calculations (pow, exp, sin, cos), texture lookups and conditional branching (if statements).

  - Texture Optimisation: Use compressed texture formats suitable for your target platform. Keep texture resolutions as low as is acceptable. Minimise the number of texture samples per pixel. Consider channel packing (storing multiple greyscale maps in one RGBA texture).

  - Math Precision: Use half precision instead of float for colours and intermediate calculations where possible.

  - Custom HLSL: Writing shaders in HLSL is more complex than using Shader Graph, but it lets you write highly specific, optimised shaders that bypass some of the overhead and abstraction of visual approaches.

- Reduce Overdraw

  - Overdraw occurs when the same screen pixel is rendered multiple times within a single frame (e.g. overlapping transparent UI elements, dense particle effects, layers of foliage). Each layer adds fragment shader cost.

  - Use Unity’s Scene View Overdraw mode to visualise problem areas.

  - Minimise transparent surfaces, or use alpha testing (cutout) instead of alpha blending where possible. Alpha testing is often cheaper than blending but gives hard edges; on some mobile GPUs the discard it relies on disables early depth testing, so profile both options on your target devices.

  - Simplify particle effects or use fewer, larger particles.
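Unity’s Overdraw view is essentially a per-pixel count of how many times each pixel gets shaded. The Python sketch below computes the same quantity on a tiny made-up screen: it rasterises axis-aligned rectangles (standing in for transparent quads such as particles), counts the layers covering each pixel and reports the totals. The grid size and quads are arbitrary values chosen for illustration.

```python
def overdraw_map(width, height, quads):
    """Count how many quads cover each pixel; quads are (x0, y0, x1, y1), end-exclusive."""
    counts = [[0] * width for _ in range(height)]
    for x0, y0, x1, y1 in quads:
        for y in range(max(0, y0), min(height, y1)):
            for x in range(max(0, x0), min(width, x1)):
                counts[y][x] += 1  # one more fragment shader invocation for this pixel
    return counts


def overdraw_stats(counts):
    flat = [c for row in counts for c in row]
    covered = sum(1 for c in flat if c > 0)
    shaded = sum(flat)
    return {
        "fragments_shaded": shaded,
        "covered_pixels": covered,
        "max_layers": max(flat),
        "avg_layers": shaded / covered if covered else 0.0,
    }


# Three overlapping transparent quads on a tiny 8x8 "screen".
quads = [(0, 0, 4, 4), (2, 2, 6, 6), (3, 3, 8, 8)]
stats = overdraw_stats(overdraw_map(8, 8, quads))
print(stats["fragments_shaded"], stats["covered_pixels"], stats["max_layers"])  # 57 44 3
print(f"{stats['avg_layers']:.2f} layers per covered pixel")  # 1.30
```

Here 57 fragments are shaded to produce 44 visible pixels, roughly 30% extra fragment work from just three overlapping quads.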

Fundamentally, you want the best effects for the least work. Make sure you aren’t doing any unnecessary calculations, and simplify wherever the visual impact is minimal. Keep fragment shaders simple, and minimise texture usage and overdraw.
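To see what the Math Precision advice trades away, Python’s `struct` module can round-trip a value through IEEE 754 half precision (format code `'e'`). Values in the 0..1 colour range keep an error of at most about 0.00025, while larger magnitudes such as world-space positions quickly lose precision (and half tops out at 65504). This is a CPU-side illustration only; how a particular mobile GPU evaluates `half` in shaders depends on the hardware.

```python
import struct


def to_half(x):
    """Round a float to the nearest IEEE 754 half-precision value and back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def half_error(x):
    """Absolute error introduced by storing x as half."""
    return abs(to_half(x) - x)


# Colour-range values versus world-space-sized values.
for value in (0.5, 0.7372, 1.0, 1000.1, 5000.7):
    print(f"{value:>8} -> {to_half(value):>8} (error {half_error(value):.4f})")
```

Colours and normalised intermediates are a good fit for half; large coordinates usually are not, because between 4096 and 8192 consecutive half values are 4 units apart.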

GPU Compute & Parallel Processing

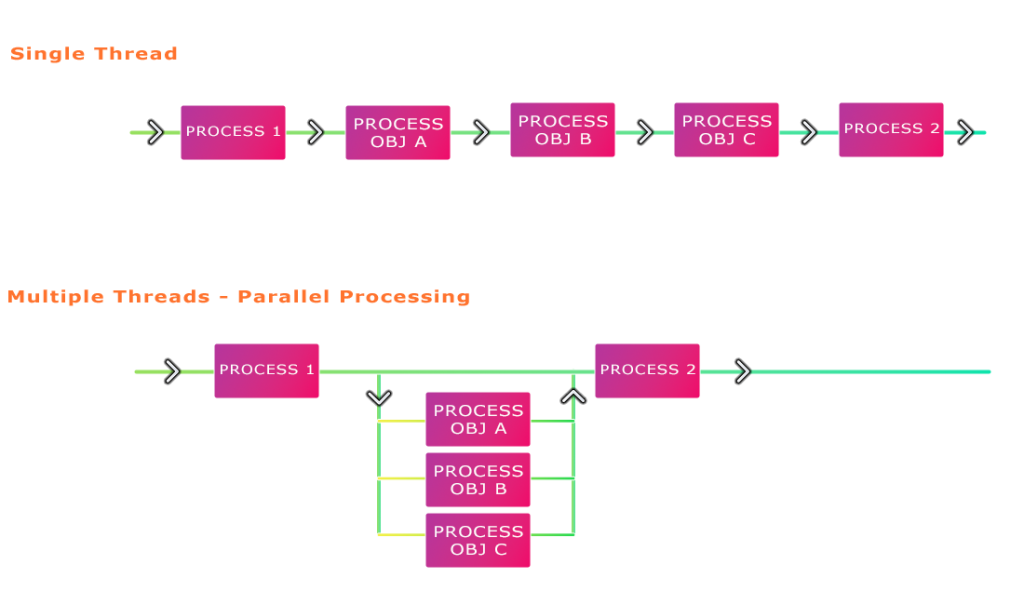

Modern GPUs aren’t just for drawing triangles! Their massively parallel architecture is perfect for certain calculations. Similarly, modern mobile CPUs have multiple cores. By using compute shaders on the GPU or the Unity Job System on the CPU, we can leverage these parallel processing capabilities. The diagram above illustrates the benefits of the Unity Job System, but the same idea applies to all parallel processing!

- GPU Compute Shaders:

  - Compute shaders let us offload suitable parallel tasks from the CPU to the GPU. The main downside is that reading data back from the GPU is slow and inefficient, so only bring important data back to the CPU, and only when necessary.

  - They are especially important for our game, given the large number of units and the physics-heavy simulation. They are also well suited to tasks such as flocking algorithms, fluid dynamics, procedural animation and pathfinding on grids.

- Unity Job System & Burst Compiler

  - This is Unity’s answer to parallel processing on the CPU, written in C# (restricted to a Burst-compatible subset when compiled with Burst). Unity schedules these jobs across multiple worker threads, and the Burst compiler translates the C# into highly optimised native machine code. Together they can provide massive CPU performance improvements over single-threaded C# code while keeping the main thread free for other tasks.

  - They are well suited to tasks such as complex gameplay logic, custom physics queries/calculations, AI behaviour updates, large array processing and procedural generation.

- Unity ECS (Entity Component System)

  - ECS is a data-oriented approach to coding. It is not parallel processing in itself, but because it works alongside the Job System and Burst Compiler it feels relevant to this section. Combined, these form DOTS (Data-Oriented Technology Stack), which promotes better CPU utilisation and highly efficient iteration and parallel processing.

  - In ECS you have Entities (IDs that act as containers), Components (data structures holding specific attributes such as position or velocity) and Systems (the logic), which operate on entities that have specific combinations of components and transform their data.
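To illustrate the Entities/Components/Systems split described above, here is a toy ECS in Python: entities are plain integer IDs, each component type has its own store keyed by entity, and a system runs only over entities that have every component it asks for. It is a conceptual sketch with hypothetical names; Unity’s Entities package stores components in archetype-based chunks and has its own, quite different API.

```python
from dataclasses import dataclass


@dataclass
class Position:
    x: float
    y: float


@dataclass
class Velocity:
    dx: float
    dy: float


class World:
    """Entities are integer IDs; each component type has its own {entity: data} store."""

    def __init__(self):
        self._next_id = 0
        self.components = {}

    def create_entity(self, *components):
        entity = self._next_id
        self._next_id += 1
        for component in components:
            self.components.setdefault(type(component), {})[entity] = component
        return entity

    def query(self, *component_types):
        """Yield (entity, comp1, comp2, ...) for entities that have every requested type."""
        stores = [self.components.get(t, {}) for t in component_types]
        smallest = min(stores, key=len)  # iterate the rarest component to skip early
        for entity in smallest:
            if all(entity in store for store in stores):
                yield (entity, *(store[entity] for store in stores))


def movement_system(world, dt):
    """System: pure logic applied to every entity with both Position and Velocity."""
    for _, position, velocity in world.query(Position, Velocity):
        position.x += velocity.dx * dt
        position.y += velocity.dy * dt


world = World()
drone = world.create_entity(Position(0.0, 0.0), Velocity(1.0, 2.0))
beacon = world.create_entity(Position(5.0, 5.0))  # no Velocity, so movement skips it
movement_system(world, dt=0.5)
print(world.components[Position][drone])   # Position(x=0.5, y=1.0)
print(world.components[Position][beacon])  # Position(x=5.0, y=5.0)
```

Because each system touches only the component data it needs, the same loop is straightforward to split across threads or, with flat arrays instead of dictionaries, to move onto the GPU.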

Compute shaders are a fundamental part of our game and provide massive performance benefits, but they can be much trickier to work with than normal C# code on the CPU. The Job System and Burst Compiler are much easier to pick up and add to existing projects. Fundamentally, you should first determine whether you are CPU-bound or GPU-bound to decide whether these optimisations are necessary.
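Whichever route you take, parallel work is split into fixed-size chunks. With compute shaders, the kernel declares its thread-group size in HLSL (for example `[numthreads(64,1,1)]`) and the CPU side passes a group count to `ComputeShader.Dispatch`. That count has to be rounded up to cover every element, and the kernel must ignore the spare threads in the last group. The Python sketch below emulates that pattern serially on the CPU; the 64-thread group size and the `update_unit` kernel are illustrative assumptions.

```python
# Assumed group size; it would need to match [numthreads(64,1,1)] in the HLSL kernel.
THREADS_PER_GROUP = 64


def thread_group_count(element_count, threads_per_group=THREADS_PER_GROUP):
    """Number of groups to dispatch so every element gets a thread (ceiling division)."""
    return (element_count + threads_per_group - 1) // threads_per_group


def emulate_dispatch(kernel, element_count, threads_per_group=THREADS_PER_GROUP):
    """Serially run kernel(global_id) for every thread a 1D dispatch would launch."""
    groups = thread_group_count(element_count, threads_per_group)
    for group_id in range(groups):
        for local_id in range(threads_per_group):
            kernel(group_id * threads_per_group + local_id)
    return groups


def make_update_unit(positions, velocities, dt):
    """Hypothetical kernel: integrate 1D unit positions."""
    def update_unit(global_id):
        if global_id >= len(positions):  # spare threads in the last group do nothing
            return
        positions[global_id] += velocities[global_id] * dt
    return update_unit


positions = [0.0] * 1000
velocities = [2.0] * 1000
groups = emulate_dispatch(make_update_unit(positions, velocities, 0.5), len(positions))
print(groups, groups * THREADS_PER_GROUP - len(positions))  # 16 24
print(positions[0], positions[-1])  # 1.0 1.0
```

On a real GPU those 16 groups run in parallel, and forgetting the bounds check is a classic source of out-of-range buffer writes.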

We have not used ECS directly in our work, but compute shaders essentially require an ECS-like data structure, so our compute shader implementation could be considered a GPU version of ECS.

Graphics APIs: Vulkan vs. OpenGL ES

Ignoring iOS (where Metal is your only option), Unity supports multiple graphics APIs on Android. When developing our game we started with OpenGL ES because it is supported on slightly more devices, but we ran into lots of issues and limitations. This prompted us to take another look at Vulkan.

Although Vulkan support on Android devices only really took off in 2018/2019, it is now widespread enough to be a viable option for mobile development. Official Android figures from April 2024 put coverage at 85%, which will keep growing and is likely to be much higher among our target gaming demographic. Vulkan is generally a much more efficient option, so we recommend making the switch!

- OpenGL ES: The older, more widely compatible standard. Higher driver overhead on the CPU.

- Vulkan: A newer, lower-level API. Significantly reduces CPU driver overhead (which can help if you are CPU-bound on draw calls), offers better support for multi-threaded rendering submission (less main-thread bottlenecking) and gives more explicit control (though Unity handles most of this).

Final Thoughts: Profile, Iterate, Balance

Unfortunately, optimisation isn’t a one-time task: it’s an ongoing process you will need to revisit and adapt as your game grows. So when you approach it:

- Profile Regularly: Use the Unity Profiler and the Frame Debugger constantly. Identify your actual bottlenecks on target mobile devices – don’t guess!

- Iterate: Make one change at a time and measure its impact.

- Balance: Optimisation often involves trade-offs (e.g., memory vs. processing, accuracy vs. speed). Find the right balance for your game’s visual goals and target hardware.
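As a small aid to “make one change at a time and measure”, here is a Python sketch that compares frame-time samples (in milliseconds) captured before and after a change against a frame budget of 1000 / target FPS. How you capture the samples is up to you; the helper names and the nearest-rank 95th percentile are illustrative choices, and the sample values are made up.

```python
import math
import statistics


def frame_budget_ms(target_fps):
    """Milliseconds available per frame at the target frame rate."""
    return 1000.0 / target_fps


def percentile(samples, pct):
    """Nearest-rank percentile, pct in (0, 100]."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarise(samples, target_fps):
    p95 = percentile(samples, 95)
    return {
        "mean_ms": statistics.fmean(samples),
        "p95_ms": p95,
        "within_budget": p95 <= frame_budget_ms(target_fps),
    }


def compare(before, after, target_fps):
    b = summarise(before, target_fps)
    a = summarise(after, target_fps)
    return {"before": b, "after": a, "mean_change_ms": a["mean_ms"] - b["mean_ms"]}


# Made-up frame times (ms) from two captures on the same device and scene.
before = [30, 32, 35, 40, 38, 33, 31, 45, 36, 34]
after = [25, 27, 26, 30, 28, 29, 26, 33, 27, 28]
result = compare(before, after, target_fps=30)
print(result["before"]["p95_ms"], result["before"]["within_budget"])  # 45 False
print(result["after"]["p95_ms"], result["after"]["within_budget"])    # 33 True
print(f"{result['mean_change_ms']:.1f} ms")                           # -7.5 ms
```

Checking a high percentile alongside the mean helps catch spikes, which players tend to notice as stutter even when the average looks fine.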

Achieving great performance on mobile requires understanding both CPU and GPU limitations and applying a range of techniques. Hopefully this post gives you some useful tips for optimising your game on mobile devices. Good luck!