Supercharging performance!

For a while we kept hearing about the Unity Jobs system and the Burst compiler, but mostly ignored them, as most of the intensive processing we have been working on runs on the GPU, which is essentially an alternative approach. But recently, while developing our formations generator, it became clear that we needed to speed up some of the processes, so we delved head first into the mysterious world of Unity Jobs and the Burst Compiler.

First off, let’s get an overview of what they are.

Unity Jobs System

Typically in Unity C#, the code you write runs on a single main thread. Everything happens sequentially, one thing after another. Even with coroutines or asynchronous functions, the work is usually just interleaved on that same thread, so nothing really happens at the same time. But as you might have heard, we have multi-core CPUs these days!

Many PC processors now have 8 to 16 threads or more. If you haven’t lived or read through the history, it’s quite interesting how the industry moved from single-core to multi-core processors once limits on CPU clock frequency were hit. Unfortunately, most programming languages (or the languages today’s languages are derived from) were created before this, so multi-core support is often an afterthought.

The Unity Jobs system is Unity’s way of letting your code take advantage of all these otherwise wasted cores/threads. At its simplest, it splits a repetitive piece of code up over multiple threads, so that each thread solves one ‘instance’ of the code, but crucially multiple threads can be doing this at once. Fantastic! So we can now make everything 8 to 16x faster? Not quite. There are limitations: we can’t just run everything on all the threads, because most code depends on the results of previous code, so the order we do things in matters. In lots of cases we simply have to wait for the answer to one line of code before we can begin the next.
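Here is a minimal sketch of how the Jobs system lets you say that order matters. The job names (ScaleJob, OffsetJob) and the helper are hypothetical. Passing the first job’s JobHandle into the second Schedule call tells Unity that the second job must not start until the first has finished. Each job is still spread across worker threads internally.

```csharp
using Unity.Collections;
using Unity.Jobs;

// Hypothetical example jobs: each iteration only touches its own index i.
public struct ScaleJob : IJobParallelFor
{
    public NativeArray<float> values;
    public float scale;
    public void Execute(int i) { values[i] *= scale; }
}

public struct OffsetJob : IJobParallelFor
{
    public NativeArray<float> values;
    public float offset;
    public void Execute(int i) { values[i] += offset; }
}

public static class JobChainExample
{
    // Computes values[i] * scale + offset. OffsetJob needs ScaleJob's results first.
    public static float[] ScaleThenOffset(float[] input, float scale, float offset)
    {
        var values = new NativeArray<float>(input, Allocator.TempJob);

        JobHandle scaleHandle = new ScaleJob { values = values, scale = scale }
            .Schedule(values.Length, 64);

        // Passing scaleHandle as the dependency guarantees the two jobs run in order.
        JobHandle offsetHandle = new OffsetJob { values = values, offset = offset }
            .Schedule(values.Length, 64, scaleHandle);

        offsetHandle.Complete(); // Block the main thread until both jobs are done.

        float[] result = values.ToArray();
        values.Dispose();
        return result;
    }
}
```

If you left out the dependency argument, Unity’s job safety system would report an error when the second job is scheduled, because both jobs write to the same array.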

Limitations:

• Code must be able to run in parallel with the main thread (for example, jobs can’t touch managed objects such as GameObjects or MonoBehaviours)

• Jobs have limited scope and can only access the data that is passed in to them

• It’s slightly tricky to get cumulative values out of parallel jobs (e.g. the total number of iterations that return value X)

Best For:

• Repetitive code that can be parallelised
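Cumulative values are tricky because parallel iterations can’t safely add to one shared counter. One common workaround is sketched below for a hypothetical task: counting how many values exceed a threshold.

- Each parallel iteration writes a 0 or 1 into its own slot of an output array, so no two iterations touch the same element.
- A second, single-threaded IJob then sums those flags. It depends on the first job, so it only runs once every flag is written.
- A length-1 NativeArray carries the total back to the main thread.

The job and helper names are our own, for illustration only.

```csharp
using Unity.Burst;
using Unity.Collections;
using Unity.Jobs;

[BurstCompile]
public struct FlagAboveThresholdJob : IJobParallelFor
{
    [ReadOnly] public NativeArray<float> values;
    [WriteOnly] public NativeArray<int> flags; // One slot per iteration, so no write conflicts.
    public float threshold;

    public void Execute(int i) { flags[i] = values[i] > threshold ? 1 : 0; }
}

[BurstCompile]
public struct SumFlagsJob : IJob
{
    [ReadOnly] public NativeArray<int> flags;
    public NativeArray<int> total; // Length 1: holds the single cumulative result.

    public void Execute()
    {
        int sum = 0;
        for (int i = 0; i < flags.Length; i++) sum += flags[i];
        total[0] = sum;
    }
}

public static class CountExample
{
    public static int CountAbove(float[] input, float threshold)
    {
        var values = new NativeArray<float>(input, Allocator.TempJob);
        var flags = new NativeArray<int>(input.Length, Allocator.TempJob);
        var total = new NativeArray<int>(1, Allocator.TempJob);

        JobHandle flagHandle = new FlagAboveThresholdJob
        {
            values = values,
            flags = flags,
            threshold = threshold
        }.Schedule(values.Length, 64);

        // The sum must wait until every flag has been written, so it depends on flagHandle.
        JobHandle sumHandle = new SumFlagsJob { flags = flags, total = total }.Schedule(flagHandle);
        sumHandle.Complete();

        int count = total[0];
        values.Dispose();
        flags.Dispose();
        total.Dispose();
        return count;
    }
}
```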

Unity Burst Compiler

You may not know this, but the C# code you write is not the code that gets run by your processor. Code goes through one or more stages of compilation, which translate your code into different languages. The final one is native machine code that runs directly on your CPU. The specifics vary depending on your target platform and Unity settings (for more information, read up on JIT and AOT compilation, the CLI and IL2CPP).

Now, these compilers often have to handle the entire scope of a language, and work on a variety of processor types with different capabilities. The Burst compiler takes a different approach. Most importantly, it compiles directly to machine code for specific target platforms. This will often mean producing multiple versions. The benefit is that it does all this for very specific targets, so it can be much more aggressive in terms of optimisation because it knows exactly what it is targeting.

Also, there are limits to what can be done inside Burst-compiled code, but the upside is that what it does do, it can do much better because it is super focused!

Limitations:

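• Only a subset of C# can be Burst compiled (Unity calls it High Performance C#, or HPC#). For example, managed objects such as class instances and regular C# arrays aren’t supported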

• Mostly used within Unity Jobs (though Burst can also compile some static methods, e.g. via function pointers)

Best For:

• Repeated heavy maths

• Combining with the parallel processing jobs system
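Here is a rough illustration of Burst-friendly ‘heavy maths’ code: a hypothetical job that computes the distance of every point from an origin. It only uses blittable value types (float, and float3 from Unity.Mathematics) and NativeArrays, which is the kind of code Burst handles well. The commented-out field shows the sort of managed type that isn’t allowed. The job and helper names are our own.

```csharp
using Unity.Burst;
using Unity.Collections;
using Unity.Jobs;
using Unity.Mathematics;

[BurstCompile]
public struct DistanceJob : IJobParallelFor
{
    [ReadOnly] public NativeArray<float3> positions;
    [WriteOnly] public NativeArray<float> distances;
    public float3 origin; // Plain value types like float3 are fine in Burst code.

    // public System.Collections.Generic.List<float3> extra;
    // Not allowed: managed reference types (classes, List<T>, T[]) can't be used here.

    public void Execute(int i)
    {
        // Unity.Mathematics types and functions (like math.distance) are designed to work well with Burst.
        distances[i] = math.distance(positions[i], origin);
    }
}

public static class DistanceExample
{
    public static float[] Distances(float3[] points, float3 origin)
    {
        var positions = new NativeArray<float3>(points, Allocator.TempJob);
        var distances = new NativeArray<float>(points.Length, Allocator.TempJob);

        new DistanceJob { positions = positions, distances = distances, origin = origin }
            .Schedule(points.Length, 64)
            .Complete();

        float[] result = distances.ToArray();
        positions.Dispose();
        distances.Dispose();
        return result;
    }
}
```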

You can probably see that the best-case scenario is Burst-compiled code running in parallel with Unity Jobs, but even using one or the other can still provide substantial benefits.

Putting It Into Practice

For our specific issue in the formation generator, we are doing lots of work on a 3D grid of points. At one point we need to make a number of adjustments to all the points (copying across the sorting and applying scaling). While this could be done in a standard loop, there is definitely a case for parallel processing, and it may also benefit from the Burst compiler. Let’s take a look:

using Unity.Burst;
using Unity.Collections;
using Unity.Jobs;
using Unity.Mathematics;

public struct PointInside {
    public float edge;
    public float sqrMagnitude; // Pre-calculated squared magnitude
    public int2 unitOrder; //.x is XY sorted values (for compute shaders), .y is Unit Priority (i.e. used to select N units)
    public float3 xyz;
}

[BurstCompile]
public struct CopyPointInsideAndAdjustJob : IJobParallelFor {
    [NativeDisableParallelForRestriction] //Required to write to index that is not i
    public NativeArray<PointInside> pointsOrigSort; // Points in their original XZ-sorted order (written to)
    [ReadOnly] public NativeArray<PointInside> pointsSorted; // Sorted points; unitOrder.x is the index into pointsOrigSort
    [ReadOnly] public float GridSizeRescaler; //Allows for rescaling of points to 1-unit spacing

    public void Execute(int i) {
        //Read in original XZ sorted item based on unit Order
        PointInside point = pointsOrigSort[pointsSorted[i].unitOrder.x];
        // Assign the new index as the ID.
        point.unitOrder.y = i;
        //Rescale to 1-unit distance
        point.xyz *= GridSizeRescaler;
        pointsOrigSort[pointsSorted[i].unitOrder.x] = point; // Write back the modified struct.
    }
}

There is quite a bit going on there, so let’s break it down. First of all, we have the definition of our PointInside struct. This just contains the information we need about our formation points.

public struct PointInside {
    public float edge;
    public float sqrMagnitude; // Pre-calculated squared magnitude
    public int2 unitOrder; //.x is XY sorted values (for compute shaders), .y is Unit Priority (i.e. used to select N units)
    public float3 xyz;
}

Next, the Job declaration. For a job to be Burst compiled by Unity, it must have the [BurstCompile] attribute immediately before its definition. There are a number of Job interfaces that can be used, but typically you only need to choose between two: IJobParallelFor and IJob. As the names might suggest, IJob runs on a single worker thread, while IJobParallelFor runs in parallel across multiple threads.
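For comparison, here is a sketch of the same adjustment written as an IJob instead. It assumes the PointInside struct above, and the job name is hypothetical. Execute() takes no index, so the loop lives inside the job and everything runs on one worker thread. Because the work isn’t split across parallel iterations, [NativeDisableParallelForRestriction] isn’t needed.

```csharp
using Unity.Burst;
using Unity.Collections;
using Unity.Jobs;

// Hypothetical single-threaded variant. Assumes the PointInside struct defined above.
[BurstCompile]
public struct CopyPointInsideAndAdjustSingleJob : IJob
{
    public NativeArray<PointInside> pointsOrigSort;
    [ReadOnly] public NativeArray<PointInside> pointsSorted;
    public float GridSizeRescaler;

    public void Execute() // IJob: no index parameter, so we loop ourselves.
    {
        for (int i = 0; i < pointsSorted.Length; i++)
        {
            int target = pointsSorted[i].unitOrder.x;
            PointInside point = pointsOrigSort[target];
            point.unitOrder.y = i;
            point.xyz *= GridSizeRescaler;
            pointsOrigSort[target] = point;
        }
    }
}
```

Scheduling it takes no length or batch size. A plain `.Schedule()` (optionally with a dependency handle) followed by `.Complete()` is enough.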

[BurstCompile]
public struct CopyPointInsideAndAdjustJob : IJobParallelFor {

We’ve chosen a parallel job because each iteration of the process is independent. The next line is not always required.

Normally Unity expects you to access read/write arrays only at the index it provides (i in the full code above). This is the safest approach, as you can be certain that no iteration will interfere with the data being used by a different one. However, part of our function specifically requires writing to a different index, so the attribute below is required.

That attribute turns off the safety check, so it’s then up to us to make sure no two iterations write to the same element. Here, that holds as long as every unitOrder.x value in pointsSorted is unique.

[NativeDisableParallelForRestriction] //Required to write to index that is not i

Now we define the data each Job instance will have access to. We will pass data in here when running the job, which I’ll show later. The first two items are NativeArrays of our PointInside struct defined earlier.

If you aren’t familiar with NativeArrays, in simplest terms they are similar to regular arrays, except that they live in unmanaged memory, while regular arrays are managed by the garbage collector. This means we need to be careful to explicitly dispose of them once we’re done. Their memory will not be freed automatically, so we can get leaks.

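The third input is just a float scaling factor. You will notice the addition of [ReadOnly] (you can also use [WriteOnly]). These attributes tell Unity’s job safety system how each NativeArray will be used, so that, for example, several jobs can read the same array at the same time. They can also help the Burst compiler optimise. On a plain value such as this float it makes little difference, as each job gets its own copy.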

public NativeArray<PointInside> pointsOrigSort; // Points in their original XZ-sorted order (written to)
[ReadOnly] public NativeArray<PointInside> pointsSorted; // Sorted points; unitOrder.x is the index into pointsOrigSort
[ReadOnly] public float GridSizeRescaler; //Allows for rescaling of points to 1-unit spacing

And finally, we have the job function itself. This must be called Execute. When using IJobParallelFor it receives the iteration index i. With IJob there is no index, and all the work runs as a single unit on one worker thread. The function itself is fairly self-explanatory.

public void Execute(int i) {

//Read in original XZ sorted item based on unit Order

PointInside point = pointsOrigSort[pointsSorted[i].unitOrder.x];

// Assign the new index as the ID.

point.unitOrder.y = i;

//Rescale to 1-unit distance

point.xyz *= GridSizeRescaler;

pointsOrigSort[pointsSorted[i].unitOrder.x] = point; // Write back the modified struct.

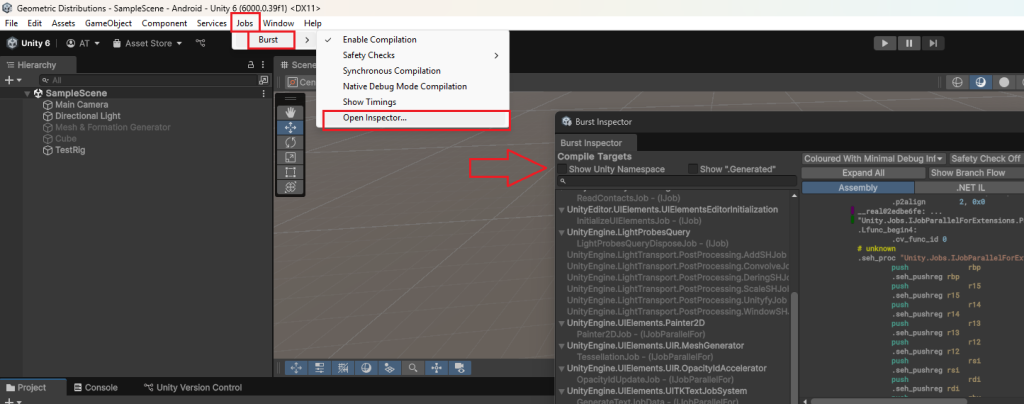

}Great, so we’ve written our first Burst Compiled Job! As mentioned before, Burst Compilation does not work on all code, so there’s no guarantee that unity has actually burst compiled it. Fortunately there is an easy way to check. Just open the burst inspector!

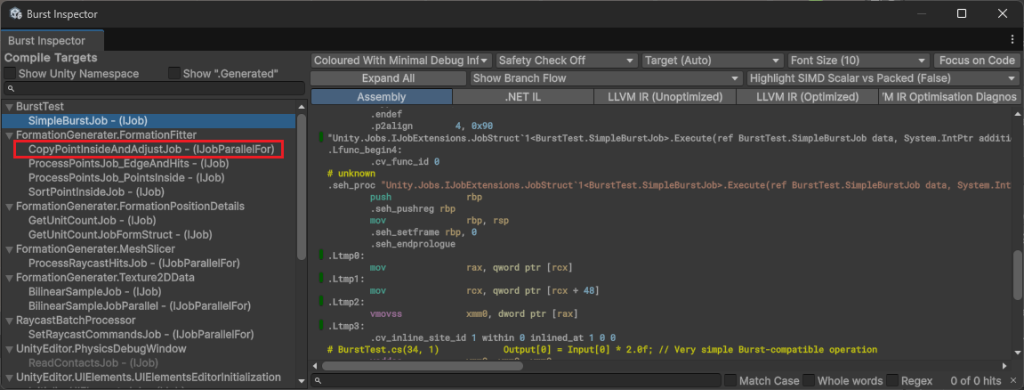

If your Job is in bold, then it has compiled properly and Unity is happy. You can see the job we just created in the list. Before writing this post we also introduced a number of other jobs, and you can see Unity has grouped them by namespace and class.
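One caveat when checking this in the Editor: by default, Burst compiles jobs asynchronously there. The first few runs of a job may therefore use the ordinary (non-Burst) C# version while compilation finishes in the background. If that matters, for example when timing a job, the CompileSynchronously option on the attribute makes the Editor wait for Burst compilation on first use. A minimal sketch with a hypothetical job:

```csharp
using Unity.Burst;
using Unity.Collections;
using Unity.Jobs;

// CompileSynchronously only matters in the Editor: on first use, Unity waits for Burst
// to finish compiling this job instead of running the managed version in the meantime.
[BurstCompile(CompileSynchronously = true)]
public struct SquareValuesJob : IJobParallelFor
{
    public NativeArray<float> values;

    public void Execute(int i)
    {
        values[i] = values[i] * values[i];
    }
}
```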

OK, so we have now successfully defined a Burst compiled Job. The next step is to use it. This isn’t quite as straightforward as calling a function, but it only requires a few extra steps. I’ve also included some examples of how to create, populate and dispose of NativeArrays. This isn’t the most efficient example (we actually keep our data in NativeArrays for much longer), but it would be a sensible approach if you were only going to use one Job on the array.

It’s also worth noting that once the job is Burst compiled, you don’t need to do anything special when running it. Unity will automatically use the Burst-compiled version.

NativeArray<PointInside> nativePointsOrigSort = new NativeArray<PointInside>(Points.Length, Allocator.TempJob);
NativeArray<PointInside> nativePointsInside = new NativeArray<PointInside>(Points.Length, Allocator.TempJob);

//--------------------------------------------------
// Various other code populating nativePointsInside
//--------------------------------------------------

//Copy from managed array to native array
nativePointsOrigSort.CopyFrom(Points);

//In parallel copy these values back onto the original XZ sorted array & make final points adjustments (Rescaling)
var sortJob = new CopyPointInsideAndAdjustJob
{
    pointsOrigSort = nativePointsOrigSort, // Written to: original XZ-sorted order
    pointsSorted = nativePointsInside,     // Read only: sorted points
    GridSizeRescaler = GridSizeRS
};

JobHandle sortJobHandle = sortJob.Schedule(nativePointsOrigSort.Length, 64);
sortJobHandle.Complete(); // Wait for job completion

//Copy back to managed array
Points = nativePointsOrigSort.ToArray();

nativePointsInside.Dispose(); // IMPORTANT: Dispose of NativeArray
nativePointsOrigSort.Dispose(); // IMPORTANT: Dispose of NativeArray

OK, let’s break down this code as well, then. First off, creating the NativeArrays. This is similar to creating a regular array, except for the final parameter, the Allocator, which tells Unity how long you expect the NativeArray to persist. The Unity documentation details the different types. We’ve found either Allocator.TempJob or Allocator.Persistent to be the most useful, depending on how long you plan to keep your NativeArray. TempJob allocations are meant to be disposed within four frames, while Persistent ones can live as long as you need.
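If you do keep a NativeArray around for longer with Allocator.Persistent, something has to own it and dispose of it. One way to do that, sketched below with a hypothetical PointBuffer class, is to wrap the array in an IDisposable owner. A MonoBehaviour holding one would typically call its Dispose from OnDestroy. The NativeArray constructor used here takes a managed array, so it allocates and copies in one step.

```csharp
using System;
using Unity.Collections;
using Unity.Mathematics;

// Hypothetical owner for a long-lived NativeArray.
public sealed class PointBuffer : IDisposable
{
    public NativeArray<float3> Points;

    public PointBuffer(float3[] source)
    {
        // Allocates with Allocator.Persistent and copies the managed data in one call.
        Points = new NativeArray<float3>(source, Allocator.Persistent);
    }

    public void Dispose()
    {
        // IsCreated guards against disposing twice (or disposing an array that was never allocated).
        if (Points.IsCreated)
            Points.Dispose();
    }
}
```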

The final line below shows a good example of getting data from our managed array, Points, into the unmanaged NativeArray. NativeArray provides fairly efficient CopyFrom and CopyTo methods that do this for you. The static NativeArray<T>.Copy overloads also let you specify source and destination offsets and a length. CopyFrom and CopyTo require both arrays to be the same length. In our example, the NativeArrays have been created with the same length as the Points array, so no extra parameters are needed.

NativeArray<PointInside> nativePointsOrigSort = new NativeArray<PointInside>(Points.Length, Allocator.TempJob);
NativeArray<PointInside> nativePointsInside = new NativeArray<PointInside>(Points.Length, Allocator.TempJob);

//--------------------------------------------------
// Various other code populating nativePointsInside
//--------------------------------------------------

//Copy from managed array to native array
nativePointsOrigSort.CopyFrom(Points);

Next we come to the meat of the code: actually scheduling the job. Our first line defines sortJob, an instance of the Job that we want to run right now, combining our Job definition with the inputs we want to use. We then schedule this job instance by calling Schedule on it, which returns a JobHandle.

The first Schedule parameter is the total number of iterations (‘loops’) we want to perform. The second is the batch size: how many iterations a worker thread takes at a time. A batch size of 32 or 64 often works well, but the best value depends on how much work each iteration does and on the hardware. (These parameters are not needed when using IJob, which runs on a single worker thread.)

Then, at the end, we need to call Complete() on our JobHandle. This makes the main thread wait for the parallel job to finish before continuing any further.

//In parallel copy these values back onto the original XZ sorted array & make final points adjustments (Rescaling)
var sortJob = new CopyPointInsideAndAdjustJob
{
    pointsOrigSort = nativePointsOrigSort, // Written to: original XZ-sorted order
    pointsSorted = nativePointsInside,     // Read only: sorted points
    GridSizeRescaler = GridSizeRS
};

JobHandle sortJobHandle = sortJob.Schedule(nativePointsOrigSort.Length, 64);
sortJobHandle.Complete(); // Wait for job completion
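Calling Complete() straight after Schedule(), as we do here, is the simplest option. The downside is that the main thread sits idle while the job runs. When the results aren’t needed immediately, a common pattern is to schedule the job early in the frame and complete it later. Here is a minimal sketch, assuming a hypothetical MonoBehaviour and job that halve a Persistent array every frame:

```csharp
using Unity.Burst;
using Unity.Collections;
using Unity.Jobs;
using UnityEngine;

[BurstCompile]
public struct HalveJob : IJobParallelFor
{
    public NativeArray<float> values;
    public void Execute(int i) { values[i] *= 0.5f; }
}

// Hypothetical component: schedules in Update, completes in LateUpdate.
public class DeferredJobExample : MonoBehaviour
{
    private NativeArray<float> values;
    private JobHandle handle;

    void OnEnable()
    {
        values = new NativeArray<float>(100000, Allocator.Persistent);
    }

    void Update()
    {
        handle = new HalveJob { values = values }.Schedule(values.Length, 64);
        JobHandle.ScheduleBatchedJobs(); // Hand the job to the worker threads straight away.
        // ...other main-thread work can happen here while the job runs...
    }

    void LateUpdate()
    {
        handle.Complete(); // Only block once the results are actually needed.
    }

    void OnDisable()
    {
        handle.Complete(); // Never dispose an array that a job may still be using.
        if (values.IsCreated) values.Dispose();
    }
}
```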

Finally, once we are certain the Job is complete, we can copy the results we want back into our managed array and dispose of our NativeArrays. As mentioned before, disposing of NativeArrays when you have finished with them is VITAL. Otherwise you will end up with memory leaks and an unstable application.

//Copy back to managed array
Points = nativePointsOrigSort.ToArray();

nativePointsInside.Dispose(); // IMPORTANT: Dispose of NativeArray
nativePointsOrigSort.Dispose(); // IMPORTANT: Dispose of NativeArray

And that’s it! There’s a bit of setup, and a few rules which limit what you can and cannot do within Jobs and Burst compiled code, but we found that after writing a few of them it becomes much more straightforward. As a final point: even though jobs will often be faster, make sure you benchmark your implementations against main-thread code. There is an overhead to using the Jobs system that will not always be worthwhile!
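Following that advice, here is a minimal benchmarking sketch. It uses System.Diagnostics.Stopwatch to time a plain main-thread loop against a hypothetical Burst-compiled parallel job doing the same trivial work. A warm-up run keeps one-off costs out of the measurement. Try a few different counts, since the scheduling overhead matters more for small arrays.

Timings taken in the Editor include the job safety checks. Numbers from a build, or from the Unity Profiler, will be more representative.

```csharp
using System.Diagnostics;
using Unity.Burst;
using Unity.Collections;
using Unity.Jobs;

[BurstCompile(CompileSynchronously = true)]
public struct BenchmarkSquareJob : IJobParallelFor
{
    public NativeArray<float> values;
    public void Execute(int i) { values[i] = values[i] * values[i]; }
}

public static class JobBenchmark
{
    // Returns the average time per run, in milliseconds, for a plain loop and for the job.
    public static (double loopMs, double jobMs) Compare(int count, int runs)
    {
        var managed = new float[count];
        var native = new NativeArray<float>(count, Allocator.TempJob);
        try
        {
            // Warm-up run so one-off costs (e.g. Burst compilation in the Editor) aren't timed.
            new BenchmarkSquareJob { values = native }.Schedule(count, 64).Complete();

            var stopwatch = Stopwatch.StartNew();
            for (int r = 0; r < runs; r++)
                for (int i = 0; i < count; i++)
                    managed[i] = managed[i] * managed[i];
            double loopMs = stopwatch.Elapsed.TotalMilliseconds / runs;

            stopwatch.Restart();
            for (int r = 0; r < runs; r++)
                new BenchmarkSquareJob { values = native }.Schedule(count, 64).Complete();
            double jobMs = stopwatch.Elapsed.TotalMilliseconds / runs;

            return (loopMs, jobMs);
        }
        finally
        {
            native.Dispose(); // Always release the native memory, even if something throws.
        }
    }
}
```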